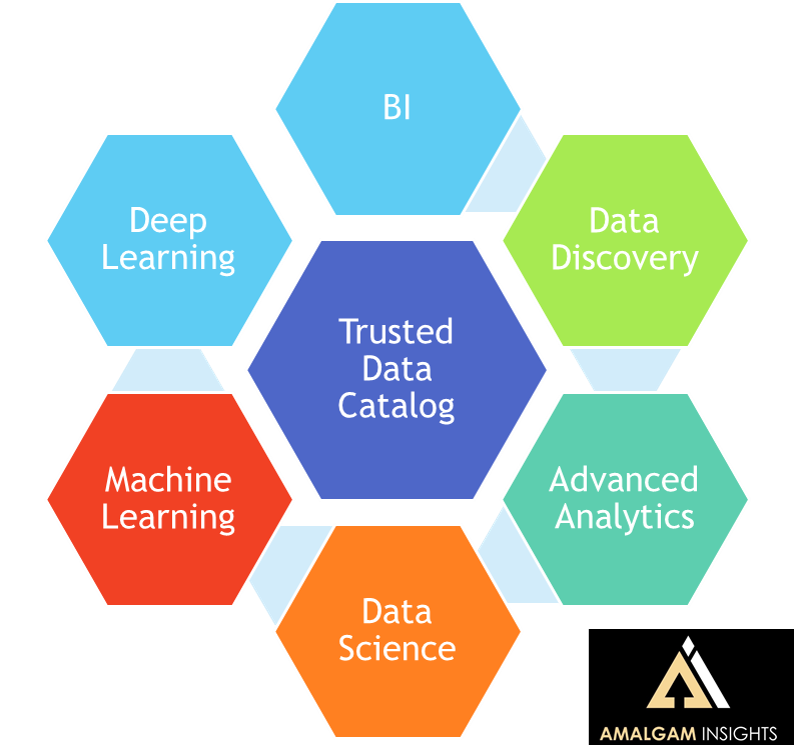

In early June, Amalgam Insights attended Alteryx Inspire ‘18, where Alteryx Chairman and CEO Dean Stoecker led an energetic keynote to inspire their users to “Alter(yx) Everything.” Based on conversations I had with Alteryx executives, partners, and end-users, I came away with the strong impression that Alteryx wants to make advanced analytics and data science tasks as easy and quick as possible for a broad audience that may not know code – and they want to expand that community and its capabilities as quickly as possible. Data scientists and analytics-knowledgeable employees are in high demand, and the shortage is projected to worsen as the demand for these capabilities grows; data is growing faster than the existing data analyst and data scientist community can keep up with it.

Workday Surprises the IPO Market and Acquires Adaptive Insights

Key Stakeholders: Chief Information Officers, Chief Financial Officers, Chief Operating Officers, Chief Digital Officers, Chief Technology Officer, Accounting Directors and Managers, Sales Operations Directors and Managers, Controllers, Finance Directors and Managers, Corporate Planning Directors and Managers

Why It Matters: Workday snatched Adaptive Insights away from the public markets only days before IPO, acquiring a proven enterprise planning company, a trained enterprise sales team, and a team deeply skilled in ERP and enterprise application integration.

Top Takeaway: Amalgam believes Workday’s acquisition of Adaptive Insights provides a net-win for current Adaptive Insights customers, Workday customers seeking more resources dedicated both to financial planning and workforce planning, and Adaptive Insights partners looking for enterprise product enhancements.

On June 11, 2018, Workday announced that it had signed a definitive agreement to purchase Adaptive Insights, a cloud-based business planning platform. Workday will pay about $1.55 billion to acquire all shares of Adaptive Insights. The announcement came only three days before Adaptive Insights’ scheduled $115 million IPO on Thursday, June 14th, which was estimated to value the company at $705 million. The IPO was expected to be successful based on the strong subscription revenue and revenue renewal metrics that Adaptive Insights described in its S-1. Following the acquisition, Tom Bogan will continue to lead the Adaptive Insights business unit and report to Workday CEO Aneel Bhusri, and the Adaptive Insights team is expected to remain relatively intact.

Amalgam provides further details in the Market Milestone Workday Acquires Adaptive Insights and Gets a Leg Up On Oracle NetSuite where we explore:

- Adaptive Insights as a pure-play Cloud EPM player

- Adaptive Insights’ relationship with NetSuite

- What Workday gains by purchasing Adaptive Insights

- What Adaptive Insights’ customers and partners should expect

- Who wins and loses from this acquisition

SaaS Vendor and Expense Management on Display at Oktane 18

Key Stakeholders: CIO, CFO, Chief Digital Officer, Chief Technology Officer, Chief Mobility Officer, IT Asset Directors and Managers, Procurement Directors and Managers, Accounting Directors and Managers

Why It Matters: Okta is a key enabler for the discovery and management of SaaS, which is a necessary step in establishing the SaaS inventory and user identities needed to optimize costs. Amalgam noted that several leading SaaS vendor management solutions, including Flexera, Torii, and Zylo, attended Oktane 18 to speak to savvy IT executives seeking to control SaaS and Cloud expenses.

Top Takeaways: In the past year, the SaaS Vendor Management market has quickly expanded in quality, showing the increasing demand for solutions and the growing maturity of this market. As Oktane has evolved into a hub for SaaS management, this show allows SaaS Vendor Management providers to speak to relevant digital innovators and architects responsible for being IT financial stewards.

At Oktane 18, Amalgam spoke with three leading SaaS Vendor Management solutions: Flexera/Meta SaaS, Torii, and Zylo. From Amalgam’s perspective, one of the most interesting aspects of these meetings was how each vendor had a different focus and perspective despite the fact that these vendors theoretically “do the same thing.” The side-by-side comparison of these vendors provided interesting differentiation in a market that is quickly expanding. To see how these vendors differed and to find out about an additional Baker’s Dozen of vendors that Amalgam tracks as SaaS Vendor Management solutions or products with a SaaS Vendor Management component, click here to read the full report.

Why Oktane Has Become The Most Important SaaS Event of the Year

Key Stakeholders: Chief Information Officers, Chief Digital Officers, Chief Information Security Officers, Security Directors and Managers, Security Operations Directors, IT Architects, IT Strategists, Identity and Access Directors and Managers, Software Engineers, Cloud Strategists

Why This Matters: Cloud and digital strategists who are not attending Oktane risk missing out on key SaaS and IT management strategies emerging in an end-user centric model of IT.

Optimizing Sales Enablement and Sales Training by Leveraging Learning Science: A Brief Primer

Key Stakeholders: Chief Sales Officer, Sales Directors and Managers, Sales Operations Directors and Managers, Training Officers, Learning and Development Professionals

Top Takeaways: If you want an efficient sales team, you need to provide them with the right tools and train them effectively. If you want sales enablement and training tools that are highly effective, they need to be grounded in learning science, the marriage of psychology and brain science. Many sales teams and sales-focused vendors have access to these tools. What is needed is an effective way to leverage these tools to maximum advantage. This is where learning science comes in. In this report, I briefly outline the learning science behind sales enablement, people skills training, and situational awareness.

(Note: This report represents the first step in an ongoing research initiative focused on critically evaluating the effectiveness of sales enablement and sales training solutions on the market. My goal in this research is to critically evaluate and accurately reflect the current state of the sales enablement and sales training sector from the perspective of learning science.)

Cloudera Improves Enterprise Rigor and Reuse by Putting the “Science” in Data Science Workbench

Key Stakeholders: IT managers, data scientists, data analysts, database administrators, application developers, enterprise statisticians, machine learning directors and managers, existing enterprise Cloudera customers

Why It Matters: As Cloudera continues its pivot towards becoming a full-service machine learning and analytics platform, its latest updates enhance its ability to retain existing customers of its commercial data lake and Hadoop distribution looking to expand into data science workflows, and attract net-new data science customers.

Top Takeaway: Cloudera’s additions to its Data Science Workbench provide a more rigorous, scientific approach to data science than prior versions, and allow for speedier implementation of results into enterprise software applications.

Cloudera’s announcements at Strata London in late May reflect the next steps in its transformation from a Hadoop distribution and commercial data lake into a full-service machine learning and analytics platform. Key to this transformation are two new capabilities in Cloudera Data Science Workbench: Experiments, which introduces versioning to DSW, and Models, which streamlines and standardizes the model deployment process. Both of these capabilities add rigor and reproducibility to the data science process.

To read the full report, please download it from our research library.

Microsoft Azure Plus Informatica Equals Cloud Convenience

Two weeks ago (May 21, 2018), at Informatica World 2018, Informatica announced a new phase in its partnership with Microsoft. The two companies announced that Informatica’s Integration Platform as a Service, or IPaaS, would be available on Microsoft Azure as a native service, with release slated for the second half of 2018. This is a different arrangement than Informatica has with other cloud vendors such as Google or Amazon AWS. In those cases, Informatica is more of an engineering partner, developing connectors for their on-premises and cloud offerings. On Azure, by contrast, Informatica IPaaS will be available from the Azure Portal and integrated with other Azure services, especially Azure SQL Server, Microsoft’s cloud database, and Azure SQL Data Warehouse.

Informatica Prepares Enterprise Data for the Era of Machine Learning and the Internet of Things

From May 21st to May 24th, Amalgam Insights attended Informatica World. My colleague Tom Petrocelli and I were both able to attend and gain insights on the present and future of Informatica. Based on discussions with Informatica executives, customers, and partners, I gathered the following takeaways.

Informatica made a number of announcements that fit well into the new era of Machine Learning that is driving enterprise IT in 2018. Tactically, Informatica’s announcement of providing its Intelligent Cloud Services, its Integration Platform as a Service offering, natively on Azure represents a deeper partnership with Microsoft. Informatica’s data integration, synchronization, and migration services go hand-in-hand with Microsoft’s strategic goal of getting more data into the Azure cloud and shifting the data gravity of the cloud. Amalgam believes that this integration will also help increase the value of Azure Machine Learning Studio, which now will have more access to enterprise data.

Why Corporate Learning Solutions Ignore Brain Science and Create Corporate Adoption Gaps

Key Stakeholders: Chief Learning Officers, Chief Human Resource Officers, Learning and Development Directors and Managers, Corporate Trainers, Content and Learning Product Managers

Key Takeaways:

- Learning & Development (L&D) vendors offer a number of amazing technologies but minimal, if any, scientifically-validated best practices to guide clients on what to use when. This is an oversight and one that can be remedied by leveraging learning science—the marriage of psychology and brain science.

- Even the vendors beginning to embrace brain science stop at the prefrontal cortex, hippocampus, and medial temporal lobe structures that have evolved for hard skills learning. This is unacceptable as vendors effectively ignore people (aka soft) skills and the emotional aspects of learning that are mediated by different brain regions with distinct processing characteristics.

- To close the adoption gap in learning technologies, L&D vendors must start to embrace all systems of learning in the brain, and customers must demand better guidance and scientifically-validated best practices.

Tangoe Makes Its Case as a Change Agent at Tangoe LIVE 2018

Key Stakeholders: CIO, CFO, Controllers, Comptrollers, Accounting Directors and Managers, IT Finance Directors and Managers, IT Expense Management Directors and Managers, Telecom Expense Management Directors and Managers, Enterprise Mobility Management Directors and Managers, Digital Transformation Managers, Internet of Things Directors and Managers

On May 21st and 22nd, Amalgam Insights attended and presented at Tangoe LIVE 2018, the global user conference for Tangoe. Tangoe is the largest technology expense management vendor with over $38 billion in technology spend under management.

Tangoe is in an interesting liminal position. It has achieved market dominance in its core area of telecom expense management, where Amalgam estimates that Tangoe manages as much technology spend as its five largest competitors combined (Cass, Calero, Cimpl, MDSL, and Sakon). At the same time, Tangoe is being asked by its 1200+ clients how to better manage a wide variety of spend categories, including Software-as-a-Service, Infrastructure-as-a-Service, IoT, managed print, contingent labor, and outsourced contact centers. Because Tangoe is in this middle space, Amalgam finds Tangoe to be an interesting vendor. In addition, Tangoe’s average client is a 10,000-employee company with roughly $2 billion in revenue, placing Tangoe’s focus squarely on the large, global enterprise.

In this context, Amalgam analyzed both Tangoe’s themes and its presentations. First, Tangoe LIVE started with a theme of “Work Smarter” with the goal of helping clients to better understand changes in telecom, mobility, cloud, IoT, and other key IT areas.

Tangoe Themes and Keynotes

CEO Bob Irwin kicked off the keynotes by describing Tangoe’s customer base as 1/3rd fixed telephony, 1/3rd mobile-focused, and 1/3rd combined fixed and mobile telecom. This starting point shows Tangoe’s potential to grow simply by focusing on existing clients. In theory, Tangoe could double its revenue and spend under management without adding another client. This makes Tangoe difficult to measure, as much of its potential growth is based on execution rather than the market penetration that most of Tangoe’s competitors are trying to achieve. Irwin also brought up three key disruptive trends: Globalization, Uberization (real-time and localized shared services), and Digital Transformation. On the last point, Irwin noted that 65% of Tangoe customers were currently going through some level of Digital Transformation.

Next, Chief Product Officer Ivan Latanision took the stage. Latanision is relatively new to Tangoe, with prior experience in clinical development, quality management, e-commerce, and financial services, which should prove useful in supporting Tangoe’s product portfolio and the challenges of enterprise governance. In his keynote, Latanision introduced the roadmap to move Tangoe clients to one of three platforms: Rivermine, Asentinel, or the new Atlas platform being developed by Tangoe. This initial migration will be based on whether the client is looking for deeply configurable workflows, process automation, or net-new deployments with a focus on broad IT management, respectively. Based on the rough timeline provided, Amalgam expects that this three-platform strategy will be used for the next 18-24 months. Then, starting sometime in 2020, Amalgam expects that Tangoe will converge customers to the new Atlas platform.

SVP of Product Mark Ledbetter followed up with a series of demos to show the new Tangoe Atlas platform. These demos were run by product managers Amy Ramsden, Cynthia Flynn, and Chris Molnar and demonstrated how Atlas would work from a user interface, asset and service template, and cloud perspective. Amalgam’s initial perspective was that this platform represented a significant upgrade over Tangoe’s CMP platform and was on par with similar efforts that Amalgam has seen from a front-end perspective.

Product Management guru Michele Wheeler also came out to thank the initial customers who have been testing out Atlas in a production environment. Amalgam believes this is an important step forward for Tangoe in that it shows that Atlas is a workable environment and has a legitimate future as a platform going forward. The roadmap for Tangoe customers has been somewhat of a mystery in the past because there was a wide variety of platforms to integrate and aggregate from Tangoe’s previous M&A activity. Chief of Operations Tom Flynn completed the operational presentation by providing updates on Tangoe’s service delivery and upgrades. Flynn had previously acted as Tangoe’s Chief Administrative Officer and General Counsel, which makes this shift in responsibility interesting: Flynn now gets to focus on service delivery and process management rather than the governance, risk management, and compliance issues where he previously guided Tangoe.

Thought Leadership Keynotes at Tangoe LIVE

Tangoe also featured two celebrity keynotes focused on the importance of innovation and preparing for the future. Amalgam believes these keynotes were well-suited for the fundamental challenge that much of the audience faced in figuring out how to expand their responsibilities beyond the traditional view of supporting network, telecom, and mobility spend to the larger world of holistic enterprise technology spend. Innovation expert Stephen Shapiro showed the audience how to think outside of the boundaries that we lock ourselves into by looking at other departments, industries, and categories for new solutions. Business advisor and industry analyst Maribel Lopez followed up on this theme the next day by framing our current technology challenge as both the Best of Times and the Worst of Times in determining how technology can effectively empower workers. By providing a starting point for achieving the goal of digital mastery, Lopez challenged the audience to control technology and maximize its value rather than become overwhelmed by its variety, scale, and scope.

Amalgam’s Role at Tangoe LIVE

Amalgam Insights also provided two presentations at Tangoe LIVE: “Budgeting and Forecast for Technology Trends” and “The Convergence of Usage Management and IoT,” which was co-presented with Tangoe’s Craig Riegelhaupt.

In the Budgeting and Forecast presentation, Amalgam shared key trends that will affect telecom and mobility budgeting over the rest of 2018 and 2019 including:

• USTelecom’s Petition for Forbearance to the FCC, which would allow large carriers to immediately raise the resale rate of circuits by 15% and potentially allow them to stop reselling circuits to smaller carriers altogether

• Universal Service Fee variances and the potential to avoid these costs by changing carriers or procuring circuits more effectively

• The 2 GB limit for rate plans and how this will affect rate plan optimization

• The T-Mobile/Sprint Merger and its potential effects on enterprise telephony

• The wild cards of cloud and IoT as expense categories

• Why IoT Management is the ultimate Millennial Reality Check

Amalgam also teamed up with Tangoe to dig more deeply into the Internet of Things and why IoT will be a key driver for transforming mobility management over the next five years. By providing representative use cases, connectivity options, and key milestone metrics, this presentation provided Tangoe end users with an introductory guide to how their mobility management efforts needed to change to support IoT.

Tangoe’s Center of Excellence and Amalgam Insights’ Role

At Tangoe LIVE, Tangoe also announced the launch of the Tangoe Center of Excellence, which will provide training courses in topics including inventory management, expense management, usage management, soft skills, and billing disputes, with a focus on providing guidance from industry experts.

As a part of this launch, Tangoe announced that Amalgam Insights is a part of Tangoe’s COE Advisory Board. In this role, we look forward to helping Tangoe educate its customer base on the key market trends associated with managing digital transformation and creating an effective “manager of managers” capability to support the quickly expanding world of enterprise technology. These classes will start in the Fall of 2018, and Amalgam is excited to support this effort to teach best practices to enterprise IT organizations.

Conclusion

Tangoe LIVE lived up to its billing as the largest Telecom and Technology Expense Management user show. But even more importantly, Tangoe was able to show its Atlas platform and provide a framework for users that are being pushed to support change, transformation, and new spend categories. Amalgam believes that the greatest challenge over the rest of this decade for TEM managers is how they will support the emerging trends of cloud, IoT, outsourcing, and managed services, as all of these spend categories require management and optimization. At this show, Tangoe made its case that it intends to be a platform solution to support this future of IT management and support its customers across invoice, expense, service order, and usage management.

Although Tangoe’s official theme for this show was “Work Smarter,” Amalgam believes that the true theme for this show based on keynotes, roadmap, sessions, and end user questions was “Prepare for Change” with the tacit assumption of doing this with Tangoe. In facing this question head on and providing clear guidance and advisory, Tangoe made a compelling case for managing the future of IT at Tangoe LIVE.