Amalgam Insights recently had the privilege of attending Informatica World 2024. This is a must-track event for every data professional if for no other reason than Informatica’s market leadership across data integration, master data management, data catalog, data quality, API management, and data marketplace offerings. It is hard to have a realistic understanding of the current state of enterprise data without looking at where Informatica is. And at a time when data is front-and-center as the key enabler for high-quality AI tools, 2024 is a year where companies must be well-versed in the various levels of data governance, management, and augmentation needed to make enterprise data valuable.

Of course, Informatica has embraced AI fully, almost to the point where I wonder if there will be a rebrand to AInformatica later this year! But all kidding aside, my focus in listening to the opening keynote was on hearing how CEO Amit Walia and a group of select product leaders, customers, and partners would build the case for how Informatica increases business value from the CFO office’s perspective.

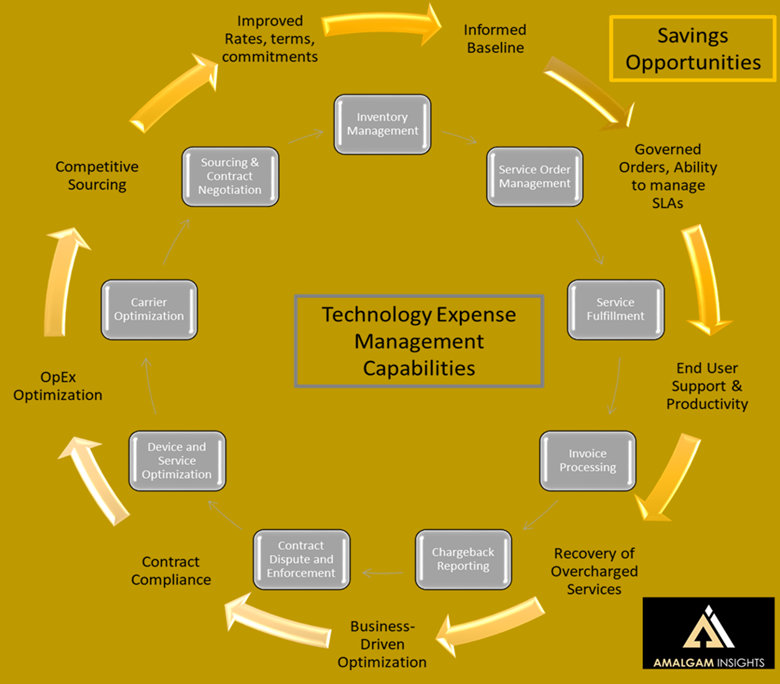

Of course, there are a variety of ways to create value from a Data FinOps (the financial operations for data management) perspective, such as eliminating duplicate data sources, reducing the size of data through quality and cleansing efforts, optimizing data transformation and analytic queries, enhancing the business context and data outputs associated with data, and increasing the accessibility, integration, and connectedness of long-tail data to core data and metadata. But in the Era of AI, there is one major theme and Informatica defined exactly what it is.

Everybody’s ready for AI except your data.

Informatica kicked off its keynote by appealing to imagination and showing “AI come to life” with the addition of relevant, high-quality data. CEO Amit Walia opened with a warning: without access to relevant and well-contextualized data, AI does not create value and is vulnerable to negative bias, lack of trust, and business risks. His assertion that data management (of course, an Informatica strength) “breathes life into AI” is both poetic and true from a practical perspective. The biggest weakness in enterprise AI today is the lack of context and anchoring caused by dirty data and missing metadata, which were ignored in an era of Big Data when we threw everything into a lake and hoped for the best. Informatica faces the challenge of cleaning up the mess created over the past decade as both the number of apps and volume of data have increased by an order of magnitude.

From a customer perspective, Informatica provided context from two Chief Data Officers during this keynote: Royal Caribbean’s Rafeh Masood and Takeda’s Barbara Latulippe. Both spoke about the need to be “AI Ready” with a focus on starting with a comprehensive data management and integration strategy. Masood’s 4Cs strategy for Gen AI of Clarity, Connecting the Dots, Change Management, and Continual Learning spoke to the fundamental challenges of anchoring AI with data and creating a data-driven culture to get to AI. As Amit Walia stated at the beginning: everybody is ready for AI except your data.

Latulippe’s approach at Takeda provided some additional tactics that should resonate with financial buyers, such as moving to the cloud to reduce data center sites, purchasing data from a variety of sources to augment and improve the value of corporate data as an asset, and consolidating data vendors from eight to two and increasing the operational role of Informatica within the organization in the process. Latulippe also mentioned a 40% cost reduction from building a unified integration hub and a data factory investment that provided a million dollars in savings from improved data preparation and cleansing. (In using these metrics as a guidepost for potential savings, Amalgam Insights cautions that the financial benefits associated with the data factory are dependent on the value of the work that data engineers and data analysts are able to pursue by avoiding scut work: some companies may not have additional data work to conduct while others may see even greater value by shifting labor to AI and high business value use cases.)

Amit Walia also brought four of Informatica’s product leaders on stage to provide roadmaps across Master Data Management, Data Governance, Data Integration, and Data Management. Manouj Tahilani, Brett Roscoe, Sumeet Agrawal, and Gaurav Pathak walked the audience through a wide range of capabilities, many of which focused on AI-enhanced methods of tracking data lineage, creating pipelines and classifications, and improving metadata and relationship creation above and beyond what is already available with CLAIRE, Informatica’s AI-powered data management engine.

Finally, the keynote ended with what has become a tradition: enshrining the Microsoft-Informatica relationship with a discussion from a high-level Microsoft executive. This year, Scott Guthrie provided the honors in discussing the synergies between Microsoft Fabric and Informatica’s Data Management Cloud.

Recommendations for the CFO Looking at Data Challenges and CIOs Seeking to Be Financial Stewards

Beyond the hype of AI is a new set of data governance and management responsibilities that must be pursued if companies are to avoid unexpected AI bills and functional hallucinations. Data environments must be designed so that all business data can now be used to help center and contextualize AI capabilities. On the FinOps and financial management side of data, a couple of capabilities that especially caught my attention were:

IPU consumption and chargeback: The Informatica Data Management Cloud, the cloud-based offering for Informatica’s data management capabilities, is priced in Informatica Processing Units (IPUs) based on its billing schedule. The ability to now charge back IPU consumption to departments, locations, and relevant business units is increasingly important in ensuring that data is fully accounted for as an operational cost or as a cost of goods sold, as appropriate. The Total Cost of Ownership for new AI projects cannot be fully understood without understanding the data management costs involved.

FinOps optimization: The keynote included multiple mentions of FinOps, mostly aligned to Informatica’s ability to optimize data processing and compute configurations. CLAIRE GPT is expected to further help with this analysis as it provides greater visibility into the data lineage, model usage, data synchronization, and other potential contributors to high-cost transactions, queries, agents, and applications.

And the greatest potential contribution to data productivity is the potential for CLAIRE GPT to accelerate the creation of new data workflows with documented and governed lineage from weeks to minutes. This “weeks to minutes” value proposition is fundamentally what CFOs should be looking for from a productivity perspective rather than more granular process mapping improvements that may promise to shave a minute off of a random process. Grab the low-hanging fruit that will result in getting 10x or 100x more work done in areas where Generative AI excels: complex processes and workflows defined by complex human language.
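To make the IPU chargeback idea above concrete, here is a minimal sketch of how consumption might be rolled up and billed back to departments. It is not Informatica’s API or billing logic: the function name `allocate_chargeback`, the record layout, the department names, and the price per IPU are all hypothetical values chosen for illustration.

```python
from collections import defaultdict
from decimal import Decimal, ROUND_HALF_UP


def allocate_chargeback(usage_records, price_per_ipu):
    """Roll up IPU usage per department and convert it to a cost.

    usage_records: iterable of (department, ipus_consumed) pairs
                   (hypothetical layout, not an Informatica export format).
    price_per_ipu: Decimal contract price per IPU (assumed flat rate).
    """
    ipus_by_dept = defaultdict(Decimal)
    for dept, ipus in usage_records:
        # Go through str() so float inputs do not carry binary rounding noise.
        ipus_by_dept[dept] += Decimal(str(ipus))

    cent = Decimal("0.01")
    return {
        dept: (ipus * price_per_ipu).quantize(cent, rounding=ROUND_HALF_UP)
        for dept, ipus in ipus_by_dept.items()
    }


# Hypothetical monthly usage and a hypothetical flat price per IPU.
records = [
    ("finance", 120),
    ("marketing", 80),
    ("finance", 30.5),
    ("supply_chain", 200),
]
charges = allocate_chargeback(records, Decimal("2.00"))
for dept, cost in sorted(charges.items()):
    print(f"{dept}: {cost}")
```

A flat rate is the simplest possible assumption; a real contract may use tiers or service-specific rates, in which case the per-department multiplication would be replaced by whatever the billing schedule specifies.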

CFOs should be aware that, in general, we are starting to reach a point where every standard IT task that has traditionally taken several weeks to approve, initiate, assign resources, write, test, govern, move to production, and deploy in an IT-approved manner is becoming either a templated or a Generative AI-supported capability that can be done in a few minutes. This may be an opportunity to reallocate data analysts and engineers to higher-level opportunities, just as the self-service analytics capabilities of a decade ago allowed many companies to advance their data abilities from report and dashboard building to higher-level data analysis. We are about to see another quantum leap in some data engineering areas. This is a good time to evaluate where large bottlenecks exist in making the company more data-driven and to invest in Generative AI capabilities that can quickly help move one or more full-time equivalents to higher-value roles such as product and revenue support or optimizing data environments.

Based on my time at Informatica World, it was clear that Informatica is ready to massively accelerate the standard data quality and governance work that has long been a bottleneck. Whether companies are simply looking for a tactical way to accelerate access to the thousands of apps and data sources that are relevant to their business or are more aggressively pursuing AI initiatives in the near term, the automation and generative AI-powered capabilities introduced by Informatica provide an opportunity for companies to step forward and improve the quality and relevance of their data in a relatively cost-effective manner compared to legacy and traditional data management tools.