In June of 2023, we are firmly in the midst of peak hype for Generative AI, as ChatGPT has absorbed the combined hype of the Metaverse, cryptocurrency, and cloud computing. We now face a deluge of self-proclaimed "prompt engineering" experts who offer guidance on the AI tools that will make your life easier. At the same time, we are running into a cohort of technologists who warn that AI is only one step away from achieving full sentience and taking over the world as an apocalyptic force.

Amid these extremes of hype, the rest of us face some genuine business concerns associated with generative AI. But our issues are not about worshipping our new robot overlords or pursuing the next generation of "digital transformation," in which an AI-driven evolution of the business lays off half the staff. Rather, we face more prosaic concerns: how to actually use Generative AI in a business environment and take advantage of the productivity gains that ChatGPT and other AI tools make possible.

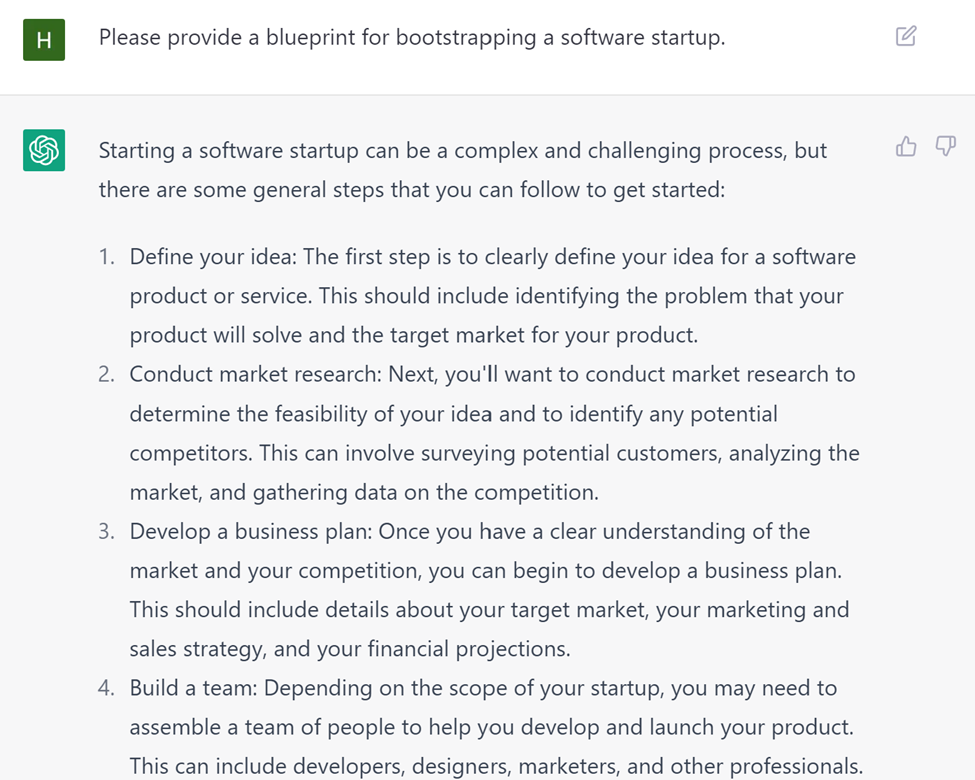

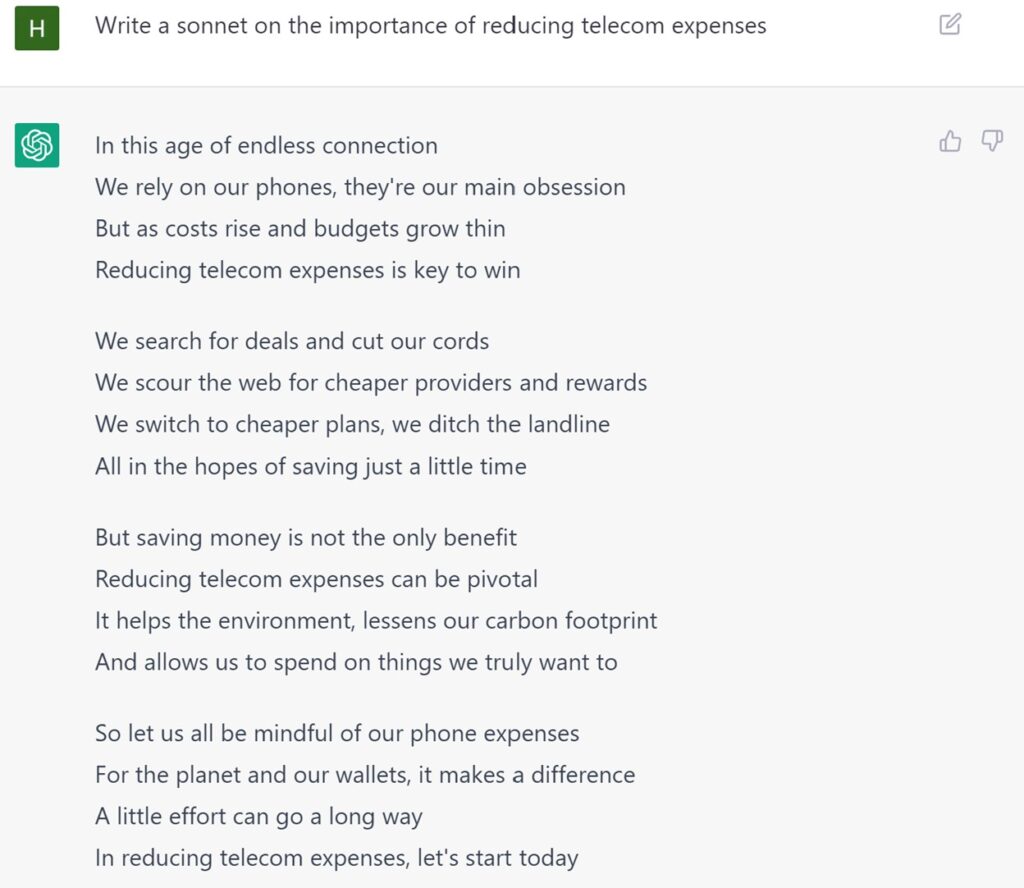

Anybody who has used ChatGPT in their area of expertise has quickly learned that ChatGPT has a lot of holes in its "knowledge" of a subject, which prevent it from providing complete answers, timely answers, or productive outputs that can truly replace expert advice. Although Generative AI provides rapid answers with a response rate, cadence, and confidence that mimic human speech, it is often missing either the context or the facts needed to provide the level of feedback that a colleague would. Instead, what we get is "instant mediocrity": an answer that matches what a mediocre colleague would provide if given a half-day, a full day, or a week to reply. If you're a writer, you will quickly notice that the essays and poems ChatGPT writes are often structurally sound but lack the insight and skill expected of a university-level assignment.

And the truth is that instant mediocrity is often a useful level of skill. If you are trying to answer a question that has only three or four plausible answers, a technology that is mediocre at that skill will probably give you the right one. If you want a standard approach for structuring a project or setting up a spreadsheet to support a process, a mediocre response is good enough. If you want to recall all of the standard marketing tools used in a business, a mediocre answer is just fine. As long as you don't need inspired answers, mediocrity can provide a lot of value.

Here are a few things to consider as your organization starts using ChatGPT. Just as when the iPhone launched 16 years ago, you don't really have a choice about whether your company is using ChatGPT. All you can do is figure out how to manage and govern its use. Our recommendations typically fall into three major categories: Strategy, Productivity, and Cost. Given the relatively low price of ChatGPT, both as a consumer-grade tool and as an API whose current pricing is typically a fraction of the cost of doing similar tasks without AI, the focus here will be on strategy and productivity.

Strategy – Every software company now has a ChatGPT roadmap, and even mid-sized companies typically have hundreds of apps under management. So there will now be 200, 500, or however many potential ways for employees to use ChatGPT over the next 12 months. Figure out how GPT is being integrated into each piece of software, and whether GPT is being used directly to process data or indirectly to help query, index, or augment data.

Strategy – Identify the value of mediocrity. For the average large enterprise, mediocrity in query writing or indexing is often a much higher standard than mediocrity in text autocompletion. Seek mediocrity in tasks where the average online standard is already higher than the average skill within your organization.

Strategy – How will you keep proprietary data out of Gen AI? Most famously, Samsung recently had a scare over proprietary information that its employees had entered into ChatGPT. How will companies ensure both that they do not put new proprietary data into generative AI tools, where it could become public, and that their existing proprietary data was not used to train generative AI models? This governance will require AI providers to be more transparent about the data sources used to build and train the models we are using today.

Strategy – On a related note, how will you keep commercially used intellectual property from being used by Gen AI? Most intellectual property covered by copyright or patent cannot be commercially reused without some form of license. Do companies need to find a way to license the data used to train commercial models? Or can model providers verify that they have not used any copyrighted data? Even if users have relinquished copyright to the specific social networks and websites they originally wrote for, this does not automatically grant OpenAI and other AI providers the same license to use that data for training. And can an AI own copyright? Once large content providers such as music publishers, book publishers, and entertainment studios realize the extent to which their intellectual property is at risk, and somebody starts making millions with AI-enabled content that strongly resembles existing IP, massive lawsuits will ensue. If you are an original content provider, be ready to defend your IP. If you are using AI, be wary of actively commercializing or claiming ownership of AI-enabled work for anything other than parody or stock work that can be easily replaced.

Productivity – Is the code enterprise-grade: secure, compliant, and free of private corporate metadata? One of the most interesting new use cases for generative AI is the ability to create working code without prior knowledge of the programming language. Although generative AI cannot currently create entire applications without significant developer engagement, it can quickly provide narrowly defined snippets, functions, and calls that may have been hard to search for explicitly or to find on Stack Overflow or in Git repositories. As this use case proliferates, coders need to understand their auto-generated code well enough to check it for security issues, performance problems, appropriate metadata and documentation, and reusability under corporate service and workload management policies. But this will increasingly allow developers to shift from writing every line themselves to editing and proofing the quality of code. In doing so, we may see a renaissance of cleaner, more optimized, and more reusable code for internal applications as the baseline for code becomes "instantly mediocre."

Productivity – Quality, not Quantity. There are hundreds of AI-enabled tools out there that provide chat, search-based outputs, generative text and graphics, and other AI capabilities. Measure twice and cut once when choosing the tools you use. It's better to find the five tools that actually make the most of the mediocre output you receive than to adopt 150 tools that don't.

Productivity – Are employees trained to fact-check and proof their Gen AI outputs? Whether employees are creating text, getting sample code, or prompting new graphics and video, the outputs need to be verified against a fact-based source to ensure that the generative AI has not "hallucinated" or autocompleted incorrect details. Generative AI seeks to provide the next word or pixel that is most closely associated with the prompt it has been given, but there is no guarantee that this autocompletion is factual just because it is related to the prompt at hand. Although a lot of work is being done to make general models more factual, this is an area where enterprises will likely have to build their own, more personalized models over time that are industry-, language-, and culture-specific. Ideally, ChatGPT and other Generative AI tools are a learning and teaching experience, not just a quick cheat.

Productivity – How will Generative AI help accelerate your process and workflow automation? Currently, automation tends to be a rules-driven set of processes that lead to the execution of a specific action. But generative AI can do a mediocre job of translating intention into a set of directions or tasks that need to be completed. While generative AI may get the order of actions wrong or make other text-based errors that a human needs to catch, it can accelerate the initial discovery and staging of the steps needed to complete business processes. Over time, this approach to process mapping based on natural language generation will become the standard starting point for process automation. Process automation engineers, workflow engineers, and process miners will all need to learn how to optimize prompts to define processes quickly.

Cost – What will you need to do to build your own AI models? Although most ChatGPT announcements focus on some level of integration between an existing platform or application and GPT or another generative AI tool, there are exceptions. BloombergGPT provides a model built on the financial data Bloomberg has collected to help support financial research. Stanford University's Alpaca and Databricks' Dolly have both provided tools for building custom large language models. At some point, businesses are going to want to use their own proprietary documents, data, jargon, and processes to build their own custom AI assistants and models. When it comes time for businesses to build their own billion-parameter, billion-word models, will they be ready with the combination of metadata definitions, a comprehensive data lake, role definitions, compute and storage resources, and data science operationalization capabilities needed to support these custom models? And how will companies justify the cost of model creation compared to using existing models? Amalgam Insights has some ideas that we'll share in a future blog. For now, let's just say that the real challenge is not in defining better results, but in making the right data investments now so that the organization can take the steps that thought leaders like Bloomberg have already taken in digitizing their data and algorithmically opening it up with generative AI.

Although we somewhat jokingly call ChatGPT "instant mediocrity," the phrase should be taken seriously as a description of both the speed and the quality of its responses. By some standards, mediocrity is actually a high level of performance. Getting an immediate response at the level an average 1x'er employee could provide is valuable, as long as it is seen for what it is rather than glorified or exaggerated. Treat it as intern- or student-level output that requires professional review rather than as an independent assistant, and it will greatly improve your professional output. Treat it as an expert, and you may end up needing legal assistance. (But maybe not from this lawyer.)