The past week has been “Must See TV” in the tech world as AI darling OpenAI provided a season of Reality TV to rival anything created by Survivor, Big Brother, or the Kardashians. Although I often joke that my professional career has been defined by the well-known documentaries of “The West Wing,” “Pitch Perfect,” and “Sillcon Valley,” I’ve never been a big fan of the reality TV genre as the twist and turns felt too contrived and over the top… until now.

Starting on Friday, November 17th, when The Real Housewives of OpenAI started its massive internal feud, every organization working on an AI project has been watching to see what would become of the overnight sensation that turned AI into a household concept with the massively viral ChatGPT and related models and tools.

So, what the hell happened? And, more importantly, what does it mean for the organizations and enterprises seeking to enter the Era of AI and the combination of generative, conversational, language-driven, and graphic capabilities that are supported with the multi-billion parameter models that have opened up a wide variety of business processes to natural language driven interrogation, prioritization, and contextualization?

The Most Consequential Shake Up In Technology Since Steve Jobs Left Apple

The crux of the problem: OpenAI, the company we all know as the creator of ChatGPT and the technology provider for Microsoft’s Copilots, was fully controlled by another entity, OpenAI, the nonprofit. This nonprofit was driven by a mission of creating general artificial intelligence for all of humanity. The charter starts with“OpenAI’s mission is to ensure that artificial general intelligence (AGI) – by which we mean highly autonomous systems that outperform humans at most economically valuable work – benefits all of humanity. We will attempt to directly build safe and beneficial AGI, but will also consider our mission fulfilled if our work aids others to achieve this outcome.”

There is nothing in there about making money. Or building a multi-billion dollar company. Or providing resources to Big Tech. Or providing stakeholders with profit other than highly functional technology systems. In fact, further in the charter, it even states that if a competitor shows up with a project that is doing better at AGI, OpenAI commits to “stop competing with and start assisting this project.”

So, that was the primary focus of OpenAI. If anything, OpenAI was built to prevent large technology companies from being the primary force and owner of AI. In that context, four of the six board members of OpenAI decided that open AI‘s efforts to commercialize technology were in conflict with this mission, especially with the speed of going to market, and the shortcuts being made from a governance and research perspective.

As a result, they ended up firing both the CEO, Sam, Altman and removed President COO Greg Brockman, who had been responsible for architecting that resources and infrastructure associated with OpenAI, from the board. That action begat this rapid mess and chaos for this 700+ employee organization which was allegedly about to see an 80 billion dollar valuation

A Convoluted Timeline For The Real Housewives Of Silicon Valley

Friday: OpenAI’s board fires its CEO and kicks its president Greg Brockman off the board. CTO Mira Murati, who was called the night before, was appointed temporary CEO. Brockman steps down later that day.

Saturday: Employees are up in arms and several key employees leave the company, leading to immediate action by Microsoft going all the way up to CEO Satya Nadella to basically ask “what is going on? And what are you doing with our $10 billion commitment, you clowns?!” (Nadella probably did not use the word clowns, as he’s very respectful.)

Sunday: Altman comes in the office to negotiate with Microsoft and OpenAI’s investors. Meanwhile, OpenAI announces a new CEO, Emmett Shear, who was previously the CEO of video game streaming company Twitch. Immediately, everyone questions what he’ll actually be managing as employees threaten to quit, refuse to show up to an all-hands meeting, and show Altman overwhelming support on social media. A tumultuous Sunday ends with an announcement by Microsoft that Altman and Brockman will lead Microsoft’s AI group.

Monday: A letter shows up asking the current board to resign with over 700 employees threatening to quit and move to the Microsoft subsidiary run by Altman and Brockman. Co-signers include board member and OpenAI Ilya Sutskever, who was one of the four board votes to oust Altman in the first place.

Tuesday: The new CEO of OpenAI, Emmett Shear, states that he will quit if the OpenAI board can’t provide evidence of why they fired Sam Altman. Late that night, Sam Altman officially comes back to OpenAI as CEO with a new board consisting initially of Bret Taylor, former co-CEO of Salesforce, Larry Summers (former Secretary of the Treasury), and Adam d’Angelo, one of the former board members who voted to figure Sam Altman. Helen Toner of Georgetown and Tasha McCauley, both seen as ethical altruists who were firmly aligned with OpenAI’s original mission, both step down from the board.

Wednesday: Well, that’s today as I’m writing this out. Right now, there are still a lot of questions about the board, the current purpose of OpenAI, and the winners and losers.

Keep In Mind As We Consider This Wild And Crazy Ride

OpenAI was not designed to make money. Firing Altman may have been defensible from OpenAI’s charter perspective to build safe General AI for everyone and to avoid large tech oligopolies. But if that’s the case, OpenAI should not have taken Microsoft’s money. OpenAI wanted to have its cake and eat it as well with a board unused to managing donations and budgets at that scale.

Was firing Altman even the right move? One could argue that productization puts AI into more hands and helps prepare society for an AGI world. To manage and work with superintelligences, one must first integrate AI into one’s life and the work Altman was doing was putting AI into more people’s hands in preparation for the next stage of global access and interaction with superintelligence.

At the same time, the vast majority of current OpenAI employees are on the for-profit side and signed up, at least in part, because of the promise of a stock-based payout. I’m not saying that OpenAI employees don’t also care about ethical AI usage, but even the secondary market for OpenAI at a multi-billion dollar valuation would help pay for a lot of mortgages and college bills. But tanking the vast majority of employee financial expectations is always going to be a hard sell, especially if they have been sold on a profitable financial outcome.

OpenAI is expensive to run: probably well over 2 billion dollars per year, including the massive cloud bill. Any attempt to slow down AI development or reduce access to current AI tools needs to be tempered by the financial realities of covering costs. It is amazing to think that OpenAI’s board was so naïve that they could just get rid of the guy who was, in essence, their top fundraiser or revenue officer without worrying about how to cover that gap.

Primary research versus go-to-market activities are very different. Normally there is a church-and-state type of wall between these two areas exactly because they are to some extent at odds with each other. The work needed to make new, better, safer, and fundamentally different technology is often conflicted with the activity used to sell existing technology. And this is a division that has been well established for decades in academia where patented or protected technologies are monetized by a separate for-profit organization.

The Effective Altruism movement: this is an important catchphrase in the world of AI, as it is not just defined as a dictionary definition. This is a catchphrase for a specific view of developing artificial general intelligence (superintelligences beyond human capacity) with the goal of supporting a population of 10^58 millennia from now. This is one extreme of the AI world, which is countered by a “doomer” mindset thinking that AI will be the end of humanity.

Practically, most of us are in between with the understanding that we have been using superhuman forces in business since the Industrial Revolution. We have been using Google, Facebook, data warehouses, data lakes, and various statistical and machine learning models for a couple of decades that vastly exceed human data and analytic capabilities.

And the big drama question for me: What is Adam d’Angelo still doing on the board as someone who actively caused this disaster to happen? There is no way to get around the fact that this entire mess was due to a board-driven coup and he was part of the coup. It would be surprising to see him stick around for more than a few months especially now that Bret Taylor is on board, who provides an overlap of experiences and capabilities that d’Angelo possesses, but at greater scale.

The 13 Big Lessons We All Learned about AI, The Universe, and Everything

First, OpenAI needs better governance in several areas: board, technology, and productization.

- Once OpenAI started building technologies with commercial repercussions, the delineation between the non-profit work and the technology commercialization needed to become much clearer. This line should have been crystal clear before OpenAI took a $10 billion commitment from Microsoft and should have been advised by a board of directors that had any semblance of experience in managing conflicts of interest at this level of revenue and valuation. In particular, Adam d’Angelo as the CEO of a multi-billion dollar valued company and Helen Toner of Georgetown should have helped to draw these lines and make them extremely clear for Sam Altman prior to this moment.

- Investors and key stakeholders should never be completely surprised by a board announcement. The board should only take actions that have previously been communicated to all major stakeholders. Risks need to be defined beforehand when they are predictable. This conflict was predictable and, by all accounts, had been brewing for months. If you’re going to fire a CEO, make sure your stakeholders support you and that you can defend your stance.

- You come at the king, you best not miss.” As Omar said in the famed show “The Wire,” you cannot try to take out the head of an organization unless your followup plan is tight.

- OpenAI’s copyright challenges feel similar to when Napster first became popular as a streaming platform for music. We had to collectively figure out how to avoid digital piracy while maintaining the convenience that Napster provided for supporting music and sharing other files. Although the productivity benefits make generative AI worth experimenting with, always make sure that you have a back up process or capability for anything supported with generative AI.

OpenAI and other generative AI firms have also run into challenges regarding the potential copyright issues associated with their models. Although a number of companies are indemnifying clients from damages associated with any outputs associated with their models, companies will likely still have to stop using any models or outputs that end up being associated with copyrighted material.

From Amalgam Insights’ perspective, the challenge with some foundational models is that training data is used to build the parameters or modifiers associated with a model. This means that the copyrighted material is being used to help shape a product or service that is being offered on a commercial basis. Although there is no legal precedent either for or against this interpretation, the initial appearance of this language fits with the common sense definitions of enforcing copyright on a commercial basis. This is why the data collating approach that IBM has taken to generative AI is an important differentiator that may end up being meaningful.

- Don’t take money if you’re not willing to accept the consequences. This is a common non-profit mistake to accept funding and simply hope it won’t affect the research. But the moment research is primarily dependent on one single funder, there will always be compromises. Make sure those compromises are expressly delineated in advance and if the research is worth doing under those circumstances.

- Licensing nonprofit technologies and resources should not paralyze the core non-profit mission. Universities do this all the time! Somebody at OpenAI, both in the board and at the operational level, should be a genius at managing tech transfer and commercial utilization to help avoid conflicts between the two institutions. There is no reason that the OpenAI nonprofit should be hamstrung by the commercialization of its technology because there should be a structure in place to prevent or minimize conflicts of interest other than firing the CEO.

Second, there are also some important business lessons here.

- Startups are inherently unstable. Although OpenAI is an extreme example, there are many other more prosaic examples of owners or boards who are unpredictable, uncontrollable, volatile, vindictive, or otherwise unmanageable in ways that force businesses to close up shop or to struggle operationally. This is part of the reason that half of new businesses fail within five years.

- Loyalty matters, even in the world of tech. It is remarkable that Sam Altman was backed by over 90% of his team on a letter saying that they would follow him to Microsoft. This includes employees who were on visas and were not independently rich, but still believed in Sam Altman more than the organization that actually signed their paychecks. Although it never hurts to also have Microsoft’s Kevin Scott and Satya Nadella in your corner and to be able to match compensation packages, this also speaks to the executive responsibility to build trust by creating a better scenario for your employees than others can provide. In this Game of Thrones, Sam Altman took down every contender to the throne in a matter of hours.

- Microsoft has most likely pulled off a transaction that ends up being all but an acquisition of OpenAI. It looks like Microsoft will end up with the vast majority of OpenAI’s‘s talent as well as an unlimited license to all technology developed by OpenAI. Considering that OpenAI was about to support a stock offering with an $80 billion market cap, that’s quite the bargain for Microsoft. In particular, Bret Taylor’s ascension to the board is telling as his work at Twitter was in the best interests of the shareholders of Twitter in accepting and forcing an acquisition that was well in excess of the publicly-held value of the company. Similarly, Larry Summers, as the former president of Harvard University, is experienced in balancing non-profit concerns with the extremely lucrative business of Harvard’s endowment and intellectual property. As this board is expanded to as many as nine members, expect more of a focus on OpenAI as a for-profit entity.

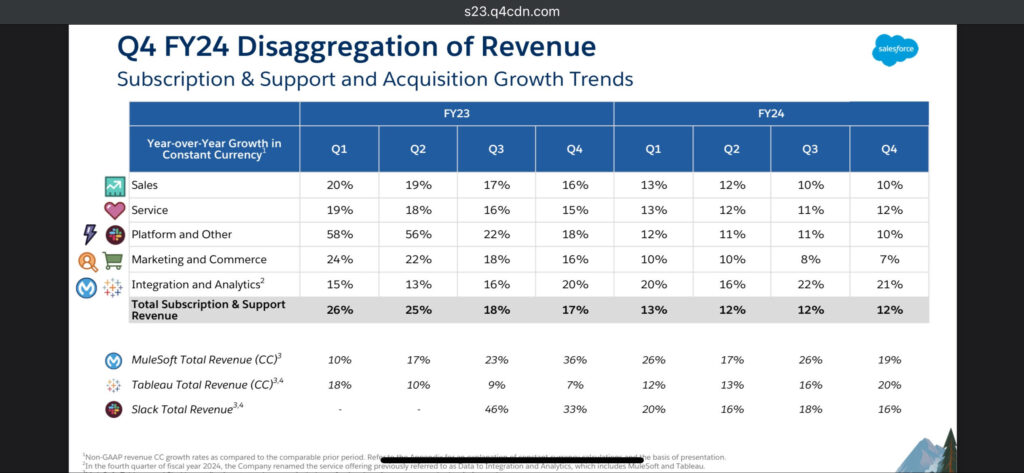

- With Microsoft bringing OpenAI closer to the fold, other big tech companies that have made recent investments in generative AI now have to bring those partners closer to the core business. Salesforce, NVIDIA, Alphabet, Amazon, Databricks, SAP, and ServiceNow have all made big investments in generative AI and need to lock down their access to generative AI models, processors, and relevant data. Everyone is betting on their AI strategy to be a growth engine over the next five years and none can afford a significant misstep.

- Satya Nadella’s handling of the situation shows why he is one of the greatest CEOs in business history. This weekend could have easily been an immense failure and a stock price toppling event for Microsoft. But in a clutch situation, Satya Nadella personally came in with his executive team to negotiate a landing for openAI, and to provide a scenario that would be palatable both to the market and for clients. The greatest CEOs have both the strategic skills to prepare for the future and the tactical skills to deal with immediate crisis. Nadella passes with flying colors on all accounts and proves once again that behind the velvet glove of Nadella’s humility and political savvy is an iron fist of geopolitical and financial power that is deftly wielded.

- Carefully analyze AI firms that may have similar charters for supporting safe AI, and potentially slowing down or stopping product development for the sake of a higher purpose. OpenAI ran into challenges in trying to interpret its charter, but the charter’s language is pretty straightforward for anyone who did their due diligence and took the language seriously. Assume that people mean what they say. Also, consider that there are other AI firms that have similar philosophies to OpenAI, such as Anthropic, which spun off of OpenAI for reasons similar to the OpenAI board reasoning of firing Sam Altman. Although it is unlikely that Anthropic (or large firms with safety-first philosophies like Alphabet and Meta’s AI teams) will fall apart similarly, the charters and missions of each organization should be taken into account in considering their potential productization of AI technologies.

- AI is still an emerging technology. Diversify, diversify, diversify. It is important to diversify your portfolio and make sure that you were able to duplicate experiments on multiple foundation models when possible. The marginal cost of supporting duplicate projects pales in comparison to the need to support continuity and gain greater understanding of the breath of AI output possibilities. With the variety of large language models, software vendor products, and machine learning platforms on the market, this is a good time to experiment with multiple vendors while designing process automation and language analysis use cases.